From surveillance and access control to smart factories and predictive maintenance, the deployment of artificial intelligence (AI) built around machine learning (ML) models is becoming ubiquitous in industrial IoT edge-processing applications. With this ubiquity, the building of AI-enabled solutions has become ‘democratized’ – moving from being a specialist discipline of data scientists to one embedded system designers are expected to understand.

The challenge of such democratization is that designers are not necessarily well-equipped to define the problem to be solved and to capture and organize data most appropriately. Unlike consumer solutions, there are few datasets for industrial AI implementations, meaning they often have to be created from scratch, from the user’s data.

Into the Mainstream

AI has gone mainstream, and deep learning and machine learning (DL and ML, respectively) are behind many applications we now take for granted, such as natural language processing, computer vision, predictive maintenance, and data mining. Early AI implementations were cloud- or server-based, requiring immense processing power, storage, and high bandwidth between AI/ML applications and the edge (endpoint). And while such set-ups are still needed for generative AI applications such as ChatGPT, DALL-E and Bard, recent years have seen the arrival of edge-processed AI, where data is processed in real time at the point of capture.

Edge processing greatly reduces reliance on the cloud, makes the overall system/application faster, requires less power, and costs less. Many also consider security to be improved, but it may be more accurate to say the main security focus shifts from protecting in-flight communications between the cloud and the endpoint to making the edge device more secure.

AI/ML at the edge can be implemented on a traditional embedded system, for which designers have access to powerful microprocessors, graphics processing units, and an abundance of memory devices, resources akin to those of a PC. However, there is a growing demand for IoT devices (commercial and industrial) to feature AI/ML at the edge, and they typically have limited hardware resources and, in many cases, are battery-powered.

The potential for AI/ML at the edge running on resource- and power-restricted hardware has given rise to the term TinyML. Example uses exist in industry (for predictive maintenance, for instance), building automation (environmental monitoring), construction (overseeing the safety of personnel), and security.

The Data Flow

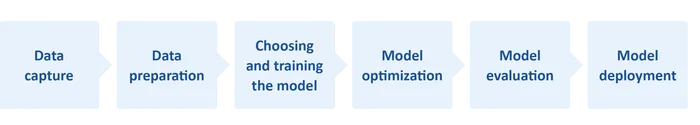

AI (and, by extension, its subset ML) requires a workflow from data capture/collection to model deployment (Figure 1). Where TinyML is concerned, optimization throughout the workflow is essential because of limited resources within the embedded system.

For instance, typical TinyML hardware offers a processing speed of 1 to 400 MHz, 2 to 512 KB of RAM, and 32 KB to 2 MB of storage (Flash). The power budget is also small, at 150 µW to 23.5 mW, and operating within it often proves challenging.
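To make these ranges concrete, the sketch below estimates whether a model's weights would fit in Flash at two numeric precisions. It is only a rough, illustrative check: the parameter count, the 512 KB Flash size, and the space reserved for application code are hypothetical values chosen to sit inside the ranges quoted above, and the estimate ignores RAM needed for activations at run time.

```python
# Rough storage estimate for model weights (illustrative assumptions only).
BYTES_PER_WEIGHT = {"FP32": 4, "INT8": 1}


def weight_storage_bytes(num_params: int, fmt: str) -> int:
    """Bytes needed to store num_params weights in the given format."""
    return num_params * BYTES_PER_WEIGHT[fmt]


def fits_in_flash(num_params: int, fmt: str, flash_bytes: int,
                  reserved_bytes: int = 0) -> bool:
    """True if the weights plus reserved space fit within flash_bytes."""
    return weight_storage_bytes(num_params, fmt) + reserved_bytes <= flash_bytes


NUM_PARAMS = 200_000        # hypothetical model size
FLASH_BYTES = 512 * 1024    # hypothetical device with 512 KB of Flash
RESERVED = 128 * 1024       # hypothetical space for application code

for fmt in ("FP32", "INT8"):
    size_kb = weight_storage_bytes(NUM_PARAMS, fmt) / 1024
    ok = fits_in_flash(NUM_PARAMS, fmt, FLASH_BYTES, RESERVED)
    print(f"{fmt}: {size_kb:.1f} KB of weights, fits: {ok}")
```

With these assumed numbers, the same model is too large in 32-bit floating point but fits once each weight is stored in a single byte, which is why compression features so heavily in TinyML workflows.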

Figure 1. A simplified AI workflow. The model deployment must itself feed data back into the flow, possibly even influencing the collection of data. (Microchip)

There is a bigger consideration, or rather trade-off, when it comes to embedding AI into a resource-restricted embedded system. Models are crucial to system behavior, but designers often have to compromise between model quality/accuracy, which influences system reliability/dependability, and performance, chiefly operating speed and power draw.

Another key factor is deciding which type of AI/ML to employ. Generally, three types of algorithms can be used: supervised, unsupervised, and reinforcement learning.

Creating Viable Solutions

Even designers who understand AI and ML well may struggle to optimize each stage of the AI/ML workflow and strike the perfect balance between model accuracy and system performance. So, how can embedded designers with no previous experience address the challenges?

Firstly, do not lose sight of the fact that models deployed on resource-restricted IoT devices will be efficient only if the model is small and the AI task is limited to solving a simple problem.

Fortunately, the arrival of ML (and particularly TinyML) into the embedded systems space has resulted in new (or enhanced) integrated development environments (IDEs), software tools, architectures, and models – many of which are open source. For example, TensorFlow™ Lite for Microcontrollers (TF Lite Micro) is a free and open-source software library for ML and AI. It was designed to implement ML on devices with just a few KB of memory. Programs can be written in Python, which is likewise free and open source.

As for IDEs, Microchip’s MPLAB® X is an example of one such environment. This IDE can be used with the company’s MPLAB ML, an MPLAB X plug-in specially developed to build optimized AI IoT sensor recognition code. Powered by AutoML, MPLAB ML fully automates each step of the AI/ML workflow, eliminating the need for repetitive, tedious, and time-consuming model building. Feature extraction, training, validation, and testing ensure optimized models that satisfy the memory constraints of microcontrollers and microprocessors, allowing developers to quickly create and deploy ML solutions on Microchip Arm® Cortex-based 32-bit MCUs or MPUs.

Optimizing Workflow

Workflow optimization tasks can be simplified by starting with off-the-shelf datasets and models. For instance, if an ML-enabled IoT device needs image recognition, it makes sense to start with an existing dataset of labeled static images and video clips for model training, testing, and evaluation. Note that labeled data is required for supervised ML algorithms.

Many image datasets already exist for computer vision applications. However, as they are intended for PC-, server- or cloud-based applications, they tend to be large. ImageNet, for instance, contains more than 14 million annotated images.

Depending on the ML application, only a few subsets might be required: say, many images of people but only a few of inanimate objects. For instance, if ML-enabled cameras are to be used on a construction site, they could immediately raise an alarm if a person not wearing a hard hat comes into their field of view. The ML model will need to be trained but, possibly, using only a few images of people wearing or not wearing hard hats. However, there might need to be a larger dataset for hat types and a sufficient range within the dataset to allow for various factors such as different lighting conditions.
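As a minimal sketch of that idea, the snippet below trims a labeled dataset down to the classes an application cares about and caps the number of examples per class. The file names, label names, and the `select_subset` helper are hypothetical and used purely for illustration; a real dataset would supply its own annotation format.

```python
from collections import defaultdict


def select_subset(records, wanted_labels, max_per_label):
    """Keep at most max_per_label records per wanted label, in input order."""
    counts = defaultdict(int)
    subset = []
    for path, label in records:
        if label in wanted_labels and counts[label] < max_per_label:
            subset.append((path, label))
            counts[label] += 1
    return subset


# Hypothetical (file name, label) pairs standing in for a large labeled dataset.
records = [
    ("img_000.jpg", "person_hard_hat"),
    ("img_001.jpg", "person_no_hard_hat"),
    ("img_002.jpg", "forklift"),
    ("img_003.jpg", "person_hard_hat"),
    ("img_004.jpg", "person_hard_hat"),
    ("img_005.jpg", "person_no_hard_hat"),
]

subset = select_subset(
    records,
    wanted_labels={"person_hard_hat", "person_no_hard_hat"},
    max_per_label=2,
)
for path, label in subset:
    print(path, label)
```

Capping each class also helps keep the training set balanced, although the right cap depends on how much variation (lighting, hat types, camera angles) the application has to cover.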

Having the correct live (data) inputs and dataset, preparing the data and training the model account for steps 1 to 3 in Figure 1. Model optimization (step 4) is typically a case of compression, which helps reduce memory requirements (RAM during processing and NVM for storage) and processing latency.

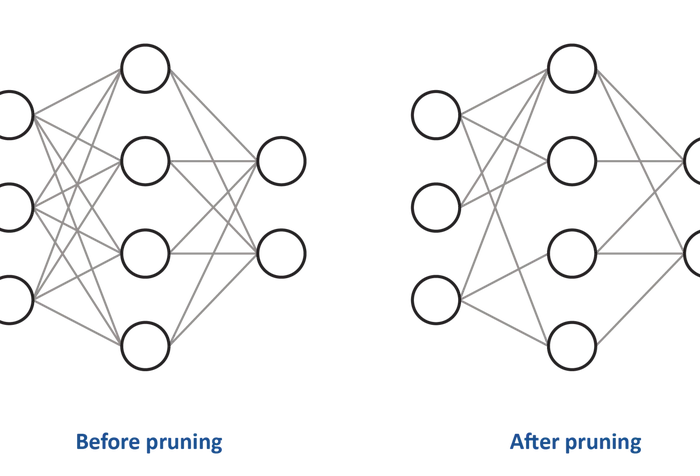

Regarding the processing, many AI algorithms, such as convolutional neural networks (CNNs), struggle with complex models. A popular compression technique is pruning (see Figure 2), of which there are several types, including weight pruning, unit/neuron pruning, and iterative pruning.

Figure 2. Pruning reduces the density of the neural network. Above, the weight of some of the connections between the neurons has been set to zero. (Microchip)
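The weight pruning shown in Figure 2 can be sketched in a few lines. The example below uses magnitude-based pruning, one common way to choose which weights to zero; it is an illustrative assumption rather than the method used by any particular tool, and the weight values and the `prune_by_magnitude` helper are made up for the example.

```python
def prune_by_magnitude(weights, sparsity):
    """Return a copy of a 2-D weight list with the smallest-magnitude
    fraction (sparsity) of weights set to zero.

    Weights tied with the threshold are also zeroed, so slightly more
    than the requested fraction may be pruned.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    num_to_prune = int(len(magnitudes) * sparsity)
    if num_to_prune == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[num_to_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row]
            for row in weights]


# Hypothetical weights for one small layer (2 neurons x 3 inputs).
weights = [
    [0.8, -0.05, 0.3],
    [0.02, -0.6, 0.1],
]

pruned = prune_by_magnitude(weights, sparsity=0.5)
for row in pruned:
    print(row)
```

In practice, pruning is usually followed by further training so that the remaining weights can compensate for the removed connections; iterative pruning repeats this prune-and-retrain cycle several times.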

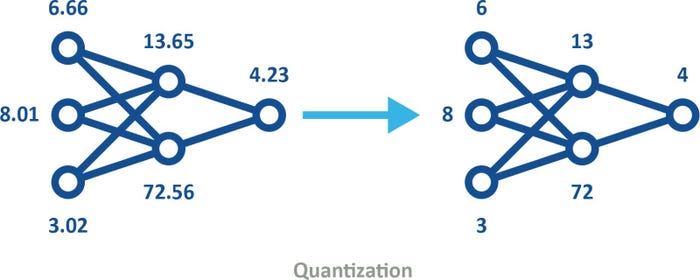

Quantization is another popular compression technique. This process converts data in a high-precision format, such as 32-bit floating point (FP32), to a lower-precision format, say an 8-bit integer (INT8). The use of quantized models (see Figure 3) can be factored into model training in one of two ways.

• Post-training quantization involves training the model in, say, FP32 format and, when the training is considered complete, quantizing it for deployment. For example, standard TensorFlow can be used for initial model training and optimization on a PC. The model can then be quantized and, through TensorFlow Lite, embedded into the IoT device.

• Quantization-aware training emulates inference-time quantization, creating a model that downstream tools will use to produce quantized models.

Figure 3. Quantized models use lower precision, thus reducing memory and storage requirements and improving energy efficiency, while still preserving the same shape. (Microchip)
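The FP32-to-INT8 conversion described above can be illustrated with a simple affine (scale and zero-point) mapping, a widely used quantization scheme. This is a sketch under stated assumptions rather than the procedure of any specific toolchain: the weight values are invented, and the helper names are hypothetical.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Compute a scale and zero point mapping the value range onto INT8."""
    lo = min(min(values), 0.0)  # include 0.0 so it is represented exactly
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers in [qmin, qmax]."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point))
            for v in values]


def dequantize(q_values, scale, zero_point):
    """Map quantized integers back to approximate float values."""
    return [(q - zero_point) * scale for q in q_values]


# Hypothetical FP32 weights.
weights = [-0.9, -0.25, 0.0, 0.5, 1.2]

scale, zero_point = quantization_params(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("INT8 values:", q_weights)
print("Largest round-trip error:", max_error)
```

Each weight now occupies one byte instead of four, at the cost of a small rounding error that is bounded by the step size (`scale`). The coarser the format, the larger that step becomes.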

While quantization is useful, it should not be used excessively. It is analogous to compressing a digital image by representing colors with fewer bits or by using fewer pixels: pushed too far, detail is lost, and in the case of a model that means lost accuracy.

Summary

AI is now well and truly in the embedded systems space. However, this democratization means design engineers who have not previously needed to understand AI and ML are faced with the challenges of implementing AI-based solutions into their designs.

While the challenge of creating ML applications can be daunting, it is not new, at least not for seasoned embedded system designers. The good news is that a wealth of information (and training) is available within the engineering community, alongside IDEs such as MPLAB X, model builders such as MPLAB ML, and open-source datasets and models. This ecosystem helps engineers with varying levels of understanding speed the development of AI and ML solutions that can now be implemented on 16-bit and even 8-bit microcontrollers.

Yann LeFaou is Associate Director for Microchip’s touch and gesture business unit. In this role, LeFaou leads a team developing capacitive touch technologies and also drives the company’s machine learning (ML) initiative for microcontrollers and microprocessors. He has held a series of technical and marketing roles at Microchip, including leading the company’s global marketing activities for capacitive touch, human-machine interface and home appliance technology. LeFaou holds a degree from ESME Sudria in France.